Infor KB article 1667935 offers a list of sample flows and scripts that address common needs in Lawson.

Download Sample Flows Scripts

Preamble

During the course of providing support for Infor Process Automation, I periodically find the need to create a process flow or short script to satisfy some need that I have. This KB article is a place you can look for some example flows and scripts. The content here is built as examples of techniques and is not intended to be executed within a Production environment without modification.

FP.pl – (perl script)

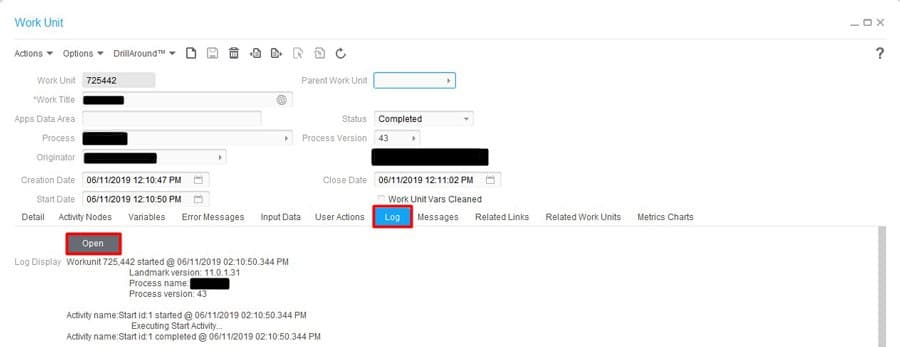

This perl script accepts a file as input, such as a workunit.log that is too large to open in a text editor, and breaks it into smaller pieces. Execute from a command window:

C:\>perl FP.pl -f C:\folder\filename.ext -n 100000

This would create new files C:\folder\filename1.ext, C:\folder\filename2.ext, and so on, each with 100,000 lines.
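The same splitting technique can be sketched in Python. This is only an illustration of the idea, not the contents of FP.pl: the function name split_file and its parameters are hypothetical, and the sketch assumes the numbered pieces are written next to the original file.

```python
from pathlib import Path


def split_file(path, lines_per_file):
    """Split a text file into numbered pieces of at most lines_per_file lines.

    Hypothetical helper: mirrors the naming shown above
    (filename1.ext, filename2.ext, ...) but is not FP.pl itself.
    """
    source = Path(path)
    created = []
    out = None
    # Read line by line so the whole file never has to fit in memory.
    with source.open("r", encoding="utf-8", errors="replace") as src:
        for index, line in enumerate(src):
            if index % lines_per_file == 0:
                # Start a new piece every lines_per_file lines.
                if out is not None:
                    out.close()
                part = index // lines_per_file + 1
                target = source.with_name(f"{source.stem}{part}{source.suffix}")
                out = target.open("w", encoding="utf-8")
                created.append(target)
            out.write(line)
    if out is not None:
        out.close()
    return created
```

The last piece simply holds whatever lines remain, so it can be shorter than the others.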

LogReporter.pl – (perl script)

This perl script is used for determining where a process flow is spending all of its time processing. It is packaged in a zip file. After unzipping it, LogReporter.pl should be inside a folder that contains a /lib folder, which holds a required perl module. To run the perl script, save a workunit log that was captured at the “Workunit Only” log level. Then from a command window, cd to the directory where LogReporter.pl exists and execute:

perl LogReporter.pl -f C:\folder\workunit.log

This will create two csv files, one being a report of where the flow spent all of its time.

IPABP-FileAccess-IntermittentWrite.lpd

This is a sample process flow which provides three examples that achieve the same result. The first technique stores all data in a message builder node, then writes to a file once. This technique is only possible if the process flow does not stop at a user action node or wait node, which would wipe current variable values. The second example appends to the file once per record returned. I recommend avoiding this strategy, as there is overhead in repeatedly opening, writing, and closing the file. The third example shows how to store data for X records, then periodically write the data to a file. This is the recommended technique, as it keeps the memory footprint small and eliminates the need to write to the file for every record.
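The third technique can be sketched outside of a process flow. The following Python is a minimal illustration under assumptions: write_in_batches, its parameters, and the one-record-per-line format are hypothetical and are not taken from the .lpd file.

```python
def write_in_batches(records, path, batch_size):
    """Append records to a file, flushing every batch_size records.

    Hypothetical helper illustrating the "store X records, then write"
    technique. Returns the number of file writes performed.
    """
    buffer = []
    writes = 0

    def flush():
        # One open/write/close cycle for the whole buffered batch.
        with open(path, "a", encoding="utf-8") as out:
            out.write("".join(f"{record}\n" for record in buffer))
        buffer.clear()

    for record in records:
        buffer.append(record)
        if len(buffer) >= batch_size:
            flush()
            writes += 1
    if buffer:
        # Write whatever is left after the last full batch.
        flush()
        writes += 1
    return writes
```

With 25 records and a batch size of 10, this opens the file three times instead of 25, while never holding more than 10 records in memory.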

IPABP-CSVFile-SQLCMD.lpd

This sample flow makes use of SQL Server’s sqlcmd utility to obtain a CSV file directly from the database. A technique like this can save significant resources and time that would otherwise be spent building the CSV file inside the flow. The command we run is:

SQLCMD -S . -d DATABASENAME -U username -P password -s, -W -Q "SELECT PFIWORKUNIT, FLOWDEFINITION, FLOWVERSION, WORKTITLE, PFIFILTERKEY, FILTERVALUE FROM [DATABASENAME].[SCHEMA].[PFIWORKUNIT]" | findstr /V /C:"-" /B >

Microsoft sqlcmd utility documentation: https://docs.microsoft.com/en-us/sql/tools/sqlcmd-utility?view=sql-server-2017

TIP: Adding -E, instead of -U and -P, causes the command to use a trusted connection and pass Windows credentials to the database. A command run from within a flow actually executes as the user that Landmark is running as, so those are the credentials passed to the database. If you add a Windows Authentication login for that user, you won’t have to pass the user and password in plain text from a process flow.

TIP: The -h flag controls header rows: -h -1 prints no headers, and -h 100 prints the headers every 100 rows of data.
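To see how the two tips combine, here is a small Python sketch that only assembles the argument list for a trusted-connection call. The function build_sqlcmd_args and its parameters are hypothetical; the flags themselves (-S, -d, -E, -s, -W, -h, -Q) are the ones discussed above. Nothing is executed against a database, and the findstr filter and output redirect from the original command are left out.

```python
def build_sqlcmd_args(server, database, query, header_rows=None):
    """Assemble a sqlcmd argument list that uses a trusted connection.

    Hypothetical helper. header_rows maps to the -h flag:
    -1 for no headers, or N to repeat the headers every N rows.
    """
    # -E replaces -U/-P so no password appears in the command.
    args = ["sqlcmd", "-S", server, "-d", database, "-E", "-s", ",", "-W"]
    if header_rows is not None:
        args += ["-h", str(header_rows)]
    args += ["-Q", query]
    return args


example = build_sqlcmd_args(
    ".",
    "DATABASENAME",
    "SELECT PFIWORKUNIT, FLOWDEFINITION FROM [DATABASENAME].[SCHEMA].[PFIWORKUNIT]",
    header_rows=-1,
)
```

Building the command as a list rather than one long string avoids quoting problems around the query text if the list is later handed to something like subprocess.run.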

IPABP-WorkunitGovernor

Periodically we see a flow that is deemed critical to the business, alongside a few less important process flows that can take a lot of time to run. In this scenario it is possible to trigger several less important flows that will occupy all available workunit threads. This causes important flows to wait for an available thread to process on. This is a sample of a technique that you could use to limit the number of less important flows that can run at the same time.

The flow uses a simple Landmark transaction to count how many workunits with the same name are currently processing. If that number is higher than the value we set, the flow will wait for a few minutes then get another count. We are leveraging the wait node, which parks the workunit and makes its workunit thread available to other flows.
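The count-then-wait loop can be sketched as follows. This is a rough Python analogy, not the flow itself: count_running stands in for the Landmark transaction, sleep stands in for the wait node, and all names and defaults are hypothetical. Unlike the wait node, a plain sleep does not release a thread, so this only illustrates the control logic.

```python
import time


def wait_for_slot(count_running, limit, wait_seconds=180, sleep=time.sleep):
    """Wait until count_running() is no longer higher than limit.

    count_running: hypothetical callable standing in for the Landmark
    transaction that counts workunits with the same name.
    Returns how many times the count was checked.
    """
    checks = 1
    while count_running() > limit:
        # In the real flow this is a wait node, which parks the workunit.
        sleep(wait_seconds)
        checks += 1
    return checks
```

Passing the sleep function in as a parameter is just a convenience for trying the logic without actually waiting several minutes.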

IPABP-JobSubmit and IPABP-JobWait

NOTE: Due to a bug in Landmark Runtime Environment, you must be above Landmark 10.1.1.10 for this flow to work

Lawson System Foundation deprecated the old cgi-lawson/jobrun.exe command in LSF10, and replaced all these cgi executables with lawson-ios/action servlets. I developed this example flow as a way to submit a job and have process automation wait for the job to finish before moving on. The benefit of this technique is it does not consume a workunit thread while the job is executing in LSF.

It works like this: You have a main flow (IPABP-JobSubmit) which uses a trigger node to launch IPABP-JobWait. When it triggers the JobWait flow, it passes all the information that is required to execute the job. JobSubmit then immediately goes to a User Action node, which drops it from processing and returns the workunit thread. JobWait submits the job, then cycles through a Wait node, periodically checking on the status of the job. When the job completes, JobWait takes an action on the JobSubmit workunit, which wakes that flow up to continue processing.

IPABP-Multithread-S3Query

Before we found the performance improvements of using the Java Interpreter available in Landmark 10.1.1.48, there were times we needed to have some long-running flows execute faster. This flow is an example where an S3 Query returned a lot of records and we needed to perform another action for every single record that was returned. To improve the processing time, we broke this up so that the S3 Query was executed simultaneously in multiple workunits instead of a single workunit.

This sample collects a set of KEYS for the table we are querying inside LSF; then triggers a new workunit to handle that group of records.
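The grouping step can be illustrated with a short Python sketch. It assumes the keys are already collected in a list and that some trigger callable starts a new workunit for one group; group_keys, dispatch_groups, and trigger are hypothetical names, not part of the sample flow.

```python
def group_keys(keys, group_size):
    """Split a list of keys into consecutive groups of at most group_size."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    return [keys[i:i + group_size] for i in range(0, len(keys), group_size)]


def dispatch_groups(keys, group_size, trigger):
    """Call trigger once per group of keys.

    trigger: hypothetical callable standing in for the node that
    triggers a new workunit to handle one group of records.
    Returns the number of groups dispatched.
    """
    groups = group_keys(list(keys), group_size)
    for group in groups:
        trigger(group)
    return len(groups)
```

The group size is the tuning knob here: smaller groups mean more workunits running side by side, which also means more workunit threads in use at once.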

IPABP-BackDatedProxyUserApproval & IPABP-ResetWorkunitsForNewProxies

In Infor Process Automation 10.1.1.x we introduced some new features. One of the new features is the concept of creating a Proxy User; this allows a Process Flow Approver to create a proxy that grants another user access to fill in for them while they are away. When this feature was developed, to avoid problems with pre-existing code we could not safely grant the proxy user access to pre-existing work items.

So if a user is assigned 2 work items on Wednesday, then sets up a proxy for another user to cover for them, the new proxy user will not see the items created Wednesday, even if you back date the proxy access to Tuesday. So I came up with the following concept:

- If I can design my approval flow with this limitation in mind, I can have an action on every User Action node that simply leaves the current UserAction node then comes right back to the same UserAction

- I can design another flow to find all workunits that are waiting on a useraction, and have that flow execute this special action which would cause those flows to leave the current UA and return

Why? Because this means that after I create a proxy, I can run my Proxy Reset flow to have a flow leave and return, thus creating new Work Items which would include the new proxy user.

The method I am describing works, but it may not be for everyone. In this instance I am stealing the Timeout action so that regular users cannot see my “Reset Action”, but if you already use a timeout, additional modification to this concept would likely be required.

IPABP-LargeHTMLContentInVariable

There have been several times where I have encountered a client looking to simply build an HTML content display exec to show a header record and all of its detail lines, such as REQHEADER and REQDETAIL. However, the database is limited to 2048 characters for a string variable. If the flow has multiple levels of approval, then after the flow stops for the first time, the perfectly built HTML is truncated down to 2048 characters.

NOTE: If you trigger your flow from a File Channel, the Input Data variable is already populated and this approach won’t work for you. Essentially this flow builds the HTML, then makes a Landmark Transaction to create a PfiWorkunitInputData record to store the HTML, then the flow uses a short term wait node. The purpose of the wait node is so that when the workunit restarts it loads PfiWorkunitInputData into the variable where it can now be used anywhere else in the flow.

IPABP-LogLevelAdjuster (Windows Landmark)

This process flow is designed to update all Process Flow Definitions: it sets the Workunit log level to “Workunit Only”, sets the CancelCheckFrequency to “Intermittent @ 30 seconds”, and disables CaptureActivityStartandEnd times. For efficiency’s sake, these are the recommended settings for running a flow inside of a Production environment unless you are specifically troubleshooting an issue with the flow.

NOTE: This flow contains 2 variables in the start node that must be updated before implementing it. The “BaseLmrkDir” variable needs to be pointed to the absolute path of the Landmark directory where the “enter.cmd” command resides. You must also use \\ instead of \ as we are storing the directory in a javascript string. For example: D:\\lmrkprod. If there are two directories, be sure to use double backslashes for all directories: D:\\Landmark\\prod.
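If you want to avoid doubling the backslashes by hand, a tiny helper can do it. This Python sketch is only an illustration; the function name to_js_string_path is hypothetical and is not part of the sample flow.

```python
def to_js_string_path(path):
    """Double every backslash so the path is safe inside a javascript string."""
    return path.replace("\\", "\\\\")


# The raw string below holds single backslashes: D:\Landmark\prod
print(to_js_string_path(r"D:\Landmark\prod"))  # prints D:\\Landmark\\prod
```

The printed value is what you would paste into the “BaseLmrkDir” variable.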

Also, you should modify the email address to your own email address.

UNIX – You should be able to modify this flow fairly easily to work for UNIX as well. You simply need to modify the system command node so that it can run a landmark command, for example: cd /landmarkdirectory && enter.sh && listdataareas. Everything else is landmark based.