Whether you are refreshing your test LBI environment or moving all your data to a new database server, you may eventually need to migrate your report data for LBI. This is a relatively simple process, provided the LBI instances using the data are the exact same version and service pack level.

First, back up your LBI databases (LawsonFS, LawsonRS, LawsonSN) on the source server and restore them to the destination server.
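If your LBI databases happen to run on Microsoft SQL Server (an assumption; the post does not name a database platform), the backup and restore could look roughly like the sketch below. The `D:\Backups` path is hypothetical, and both statements would be repeated for LawsonRS and LawsonSN.

```sql
-- Assumes Microsoft SQL Server. D:\Backups is a hypothetical path.

-- On the source server: write a full backup of the database to disk.
BACKUP DATABASE LawsonFS
TO DISK = N'D:\Backups\LawsonFS.bak'
WITH INIT, CHECKSUM;

-- On the destination server, after copying the .bak file over:
-- restore it, overwriting any existing LawsonFS database.
RESTORE DATABASE LawsonFS
FROM DISK = N'D:\Backups\LawsonFS.bak'
WITH REPLACE, RECOVERY;
```

If the data and log file locations differ between the two servers, the restore would also need `MOVE` clauses for the logical files. Other database platforms have their own export and import tools.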

If you are migrating data for one LBI instance, you just need to point your WebSphere data sources to the destination server.

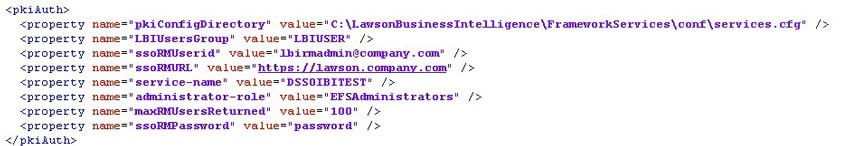

If you are migrating data for a new LBI instance, or for your test environment, you’ll need to update all the services and every reference to the old LBI instance. In the ENPENTRYATTR table of the LawsonFS database, search the ATTRSTRINGVALUE column for your old server name and replace it with the new server name. For example:

    UPDATE ENPENTRYATTR
    SET ATTRSTRINGVALUE = REPLACE(ATTRSTRINGVALUE, 'source-server', 'destination-server')
    WHERE ATTRSTRINGVALUE LIKE '%source-server%';
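Before running an update like the one above, it can help to preview which rows would change and to confirm afterward that none were missed. The queries below are a minimal sketch that uses only the table and column already named. `source-server` and `destination-server` are placeholders, and whether `LIKE` matches case-insensitively depends on your database's collation.

```sql
-- Preview: count the rows that reference the old server name.
SELECT COUNT(*) AS rows_to_update
FROM ENPENTRYATTR
WHERE ATTRSTRINGVALUE LIKE '%source-server%';

-- Preview: show each current value next to what REPLACE would produce.
SELECT ATTRSTRINGVALUE AS old_value,
       REPLACE(ATTRSTRINGVALUE, 'source-server', 'destination-server') AS new_value
FROM ENPENTRYATTR
WHERE ATTRSTRINGVALUE LIKE '%source-server%';

-- Verification: run again after the UPDATE to count any rows
-- that still contain the old server name.
SELECT COUNT(*) AS rows_remaining
FROM ENPENTRYATTR
WHERE ATTRSTRINGVALUE LIKE '%source-server%';
```

The verification count is only meaningful when the new server name does not itself contain the old one. If it does, the remaining rows will still match the pattern.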

After you update those strings, rerun the EFS, ERS, and LSN install validators to set the correct URL:

- http(s)://lbiserver.company.com:port/efs/installvalidator.jsp

- http(s)://lbiserver.company.com:port/ers/installvalidator.jsp

- http(s)://lbiserver.company.com:port/lsn/admin/installvalidator.jsp

Next, log into LBI and go to Tools > Services. Open each service definition, look for the source server name, and replace it with the destination server name.

Finally, make sure your data sources point to the proper ODBC DSNs, adding new ODBC connections where needed. Then test and verify all your reports.