There are two places where GL distribution information resides. The first is MMDIST: if the item is a special item or is not set up on IC12, MMDIST is where you will find its GL distribution information.

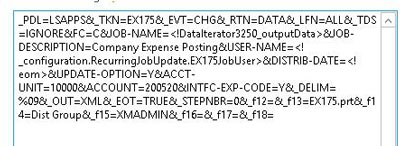

Keep in mind that a PO line might have started as a requisition, in which case its GL distributions were added at the requisition stage. When you look for PO line distributions, filter on a document type of PO and specify the company and the document number of the PO you are looking for.
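The filtering rule above can be illustrated with a small Python sketch. It is not a query against the real MMDIST table: the `DistRow` record, its field names, the `"RQ"` requisition code, and the sample company, document, and account values are all hypothetical placeholders. It only shows that restricting on document type PO, company, and PO number excludes distribution rows recorded while the line was still a requisition.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


# Hypothetical, simplified stand-in for an MMDIST row. Real MMDIST column
# names differ; these fields are placeholders for illustration only.
@dataclass(frozen=True)
class DistRow:
    company: int
    doc_type: str      # "PO" for purchase orders; "RQ" is a placeholder requisition code
    doc_number: str
    line_nbr: int
    gl_account: str


def po_line_distributions(
    rows: Iterable[DistRow],
    company: int,
    po_number: str,
    line_nbr: Optional[int] = None,
) -> List[DistRow]:
    """Return only PO-type distribution rows for one company and PO number.

    Rows written under the requisition document type are skipped, so
    distributions added at the requisition stage are not mixed in.
    """
    return [
        r for r in rows
        if r.doc_type == "PO"
        and r.company == company
        and r.doc_number == po_number
        and (line_nbr is None or r.line_nbr == line_nbr)
    ]


# Hypothetical sample rows: one requisition row, and PO rows in two companies.
rows = [
    DistRow(100, "RQ", "5001", 1, "1000-6100"),
    DistRow(100, "PO", "5001", 1, "1000-6200"),
    DistRow(200, "PO", "5001", 1, "2000-6200"),
]

# Only the company 100 PO row (account 1000-6200) is returned.
print(po_line_distributions(rows, company=100, po_number="5001"))
```

Leaving out the company filter here would also return the company 200 row, which is why all three values (document type, company, and document number) are specified together.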

The second place is the GL Category. If the item is set up on IC12, it is either an inventory item or a non-stock item found at an IC location. Every item set up on IC12 has a GL Category assigned to it, and that is where the GL distribution information for these items can be found. The key fields are Company, IC Location, and Item; use them to find the GL distribution information for these items on the GL Category table.
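The two-place rule described in this article can be summarized as a small decision function. This is a minimal sketch under stated assumptions: the `ic12_gl_category` mapping (keyed by company, IC location, and item, and returning the assigned GL Category), the function name, and the sample location, item, and category values are hypothetical and do not represent actual Lawson table layouts or APIs.

```python
from typing import Dict, Optional, Tuple

# Hypothetical key built from the key fields named above:
# (company, IC location, item).
ItemKey = Tuple[int, str, str]


def gl_distribution_source(
    key: ItemKey,
    is_special_item: bool,
    ic12_gl_category: Dict[ItemKey, str],
) -> Tuple[str, Optional[str]]:
    """Say where an item's GL distribution information lives.

    Special items, and items not set up on IC12, use MMDIST.
    Items set up on IC12 use their assigned GL Category.
    """
    if is_special_item or key not in ic12_gl_category:
        return ("MMDIST", None)
    return ("GL Category", ic12_gl_category[key])


# Hypothetical IC12 setup: one item at location MAIN in company 100.
ic12 = {(100, "MAIN", "10045"): "SUPPLIES"}

print(gl_distribution_source((100, "MAIN", "10045"), False, ic12))  # IC12 item
print(gl_distribution_source((100, "MAIN", "99999"), False, ic12))  # not on IC12
print(gl_distribution_source((100, "MAIN", "10045"), True, ic12))   # special item
```

Checking the special-item flag first mirrors the article's wording, where a special item is sent to MMDIST regardless of any other setup.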